Programming note: We’ll be off next week (9/26) for vacation but back the week after!

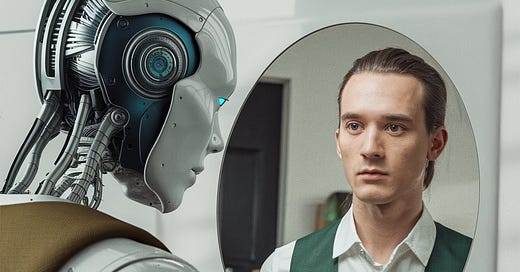

Last week, we mentioned that one of the interesting things that we’ve found in trying other AI products — AI-powered SDRs in particular — is that they make a strong effort to anthropomorphize their assistants. Each one of these tools has a realistic-looking photo of a person and name, and they talk about their product in the way that they’d talk about a person: “When you use so-and-so, s/he will…”

This isn’t something we’ve done at all with RunLLM: Our Slackbot simply uses our logo, and there’s no persona that we’ve created for the product. This hasn’t been an intentional choice per se: It simply never occurred to us that we should put a face and a name in front of our product. This observation got us thinking, however, about whether AI products should try to appear human or not.

We don’t come to this with a strong point of view, but it struck us as an important and timely question, so we thought it would be worth exploring in more detail.

In the context of AI-powered work, it makes sense to treat an AI product as a human coworker-as-a-service, so having a human persona in front of your product has a distinct appeal, but there are clear drawbacks as well. Having written what’s below, it’s clear to us that there’s no obvious right or wrong answer. We’re thinking about whether we should do this ourselves, so we hope this helps you think through your own priorities.

We’re not psychologists, but what this really boils down to is the extent to which people will expect anthropomorphized AI systems to act human. If those systems succeed, providing human-style interactions while responding faster and in more detail, they will create delightful experiences. If they fail, they will leave the user feeling worse off than before.

When you want your product to be treated like a person, you’re also asking for all the variance that humans naturally handle: everything from handling a quick peppering of multiple messages in a conversation to pushing back on an idea that’s wrong, asking follow-up questions when uncertain or confused, and dealing with ambiguity in messages.

Creating the expectation that you’re providing a human-like system that can handle all those inputs means that you have to be able to actually handle all of them! If you don’t, the mismatch between expectations and reality means that you’d have been better off not pretending to be a human in the first place.

The obvious strength of modern AI systems is that they’re very good at handling natural language. Given this strength, convincing your customers to treat your product like a human coworker (rather than a bot) is valuable because it’ll make it easier and faster for them to express an idea in a way that your product can understand. That also means that you have to be ready for whatever’s thrown at the product, which might be a screenshot, a link, or in some cases even a voice note.

Designing this well is difficult, however. If we just take Slack as an example, a person talking to another person might send multiple messages in a thread and expect that the full content will be taken into account before a response is written. On the other hand, someone talking to a bot will probably be more formal and verbose, expecting that they need to provide more context. Being able to support a more conversational style of interaction will require careful design. It’s something we’re planning on doing at RunLLM but haven’t quite figured out yet. Again, if it’s done wrong (e.g., responding too early or waiting too long), it’ll probably be pretty painful.
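To make the "too early vs. too long" tradeoff concrete, here is a minimal sketch of one possible approach: group a user's messages into batches separated by a quiet gap, and only respond once a batch is complete. This is an illustration under our own assumptions, not how RunLLM or Slack actually works; the names `Message`, `batch_messages`, and `quiet_window` are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Message:
    """A hypothetical chat message; not a real Slack API type."""
    user: str
    ts: float  # send time, in seconds
    text: str


def batch_messages(messages, quiet_window=5.0):
    """Group messages into batches separated by gaps longer than quiet_window.

    A message joins the current batch if it arrives within quiet_window
    seconds of the previous message; otherwise it starts a new batch.
    """
    batches = []
    for msg in sorted(messages, key=lambda m: m.ts):
        if batches and msg.ts - batches[-1][-1].ts <= quiet_window:
            batches[-1].append(msg)
        else:
            batches.append([msg])
    return batches


msgs = [
    Message("alice", 0.0, "hey, quick question"),
    Message("alice", 2.0, "how do I rotate my API key?"),
    Message("alice", 20.0, "also, unrelated: is there a rate limit?"),
]
for batch in batch_messages(msgs):
    print(" / ".join(m.text for m in batch))
```

The single `quiet_window` parameter is where the pain shows up: set it too small and the assistant answers before the person has finished their thought, set it too large and every reply feels sluggish.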

Whether or not you’re going to be able to do this well in your product is largely going to be mediated by what exactly it is that you’re trying to do. In the case of an AI-powered SDR, the primary modes of interaction are accepting inputs from companies, generating content for prospects, and sharing updates. There isn’t a high level of interactivity, which makes it comparatively straightforward to handle the expected inputs. On the other hand, a truly conversational assistant (like what OpenAI demoed back in April) that’s an expert in a particular domain is likely much harder.

While you don’t want to set expectations too high, you also want to be careful about setting expectations too low. Most AI product builders probably bristle at the idea that their product is “just a bot” — we certainly do.

If you plan to break out of that box (or already are breaking out of it), there’s a clear advantage to setting expectations higher. Yes, you might occasionally miss, but you’re training your customers to treat your system as one that can grow and learn over time, which in turn means that it can support a wider variety of use cases as your product matures. Staying too close to just generating one chunk of output text in response to one chunk of input text runs the risk of your customers believing that’s all you’ll ever do.

That concern may be slightly overstated as written — we all expect the products we use to get better over time and to add new functionality — but we do think it’ll be an advantage for products that set loftier expectations with customers early on, assuming they’re able to live up to those expectations.

As we said, there are no easy answers here. We haven’t yet chosen to create a persona for the RunLLM technical support engineer, and we probably aren’t going to do so in the near future. Our focus is on building out the suite of workflows that will most immediately benefit a support team, and our interaction modes are still heavily conversational. We’ve certainly found that there’s a learning curve that our customers experience as they become better at getting the information they’re looking for from us. Adding a persona would only serve to muddle that.

That, however, is certainly not a permanent decision. As the technology matures, and product best practices mature around it, we’ll likely see this change very quickly. It may be a gradual change over the next five years, or something that happens all at once in response to a dramatic improvement in functionality. Either way — whether you like it or not — we’ll all probably have plenty of anthropomorphized AIs in our lives. The real question is when we won’t be able to tell the difference.

As current and recovering academics, we figured there must be plenty of literature on this. The implications of AI for the field of HCI are massive. A quick search showed us a bunch of relevant research papers. We’re not vouching for any one of these papers, but we thought they were worth calling out.
