How to build your first LLM evaluation

We’ve talked a lot around here recently about the importance of good evaluations for AI products and LLMs. We won’t repeat the full argument, but the short version goes something like this:

For AI to justify the hype, it has to deliver value — real dollars and cents created for customers that wouldn’t have been possible otherwise.

The holy grail is that we’ll see real-world ROI numbers start to emerge for many product categories as customer adoption increases. In the interim, understanding “how good” an AI-powered application is will be critical, especially while you’re onboarding the new customers you need in order to generate that ROI data.

Generic benchmarks like MMLU and LMSys’ Elo ratings (now Bradley-Terry coefficients) try to capture many different capabilities in a single score, so they don’t tell us much about any particular application. LMSys has started moving toward more specific measurement with task-specific Elo scores.

As a result, to really show your customers how good you are, you need thoughtful evaluations of your product that are tailored to what you’re trying to accomplish.

This argument has been driven directly by customer conversations at RunLLM, and to prove our point, we’ve built an evaluation framework for our assistants. We’re starting to roll it out to customers, so we thought we’d share what we’ve built and why, in the hope that it encourages more of you to build your own evaluations.

What are we evaluating?

Our motivation for building our own evaluation framework was that potential customers consistently asked why our system would be better than their (often hypothetical) GPT-4 + RAG solution. With those who had already spent time evaluating an existing solution, we could quickly explain why. With those who hadn’t, it was a more difficult conversation.

We knew from our own internal experiments, and from comparisons we’d done with early customers, that RunLLM was better than the generic solutions on the market, but we didn’t have publicly shareable numbers from most of these customers. More importantly, customers would reasonably point out that one of the issues with AI is that working in one scenario doesn’t mean it will work in another, and we didn’t have any numbers specific to each customer we were talking to.

Ultimately, our goal here was to show our customers something they immediately understood: We took your product, built an expert on it, and measured how good its answers were. Critically, you can look at our benchmarks and tell us if we were right or wrong based on your own expertise. That’s a necessary first step in building confidence.

How do we evaluate it?

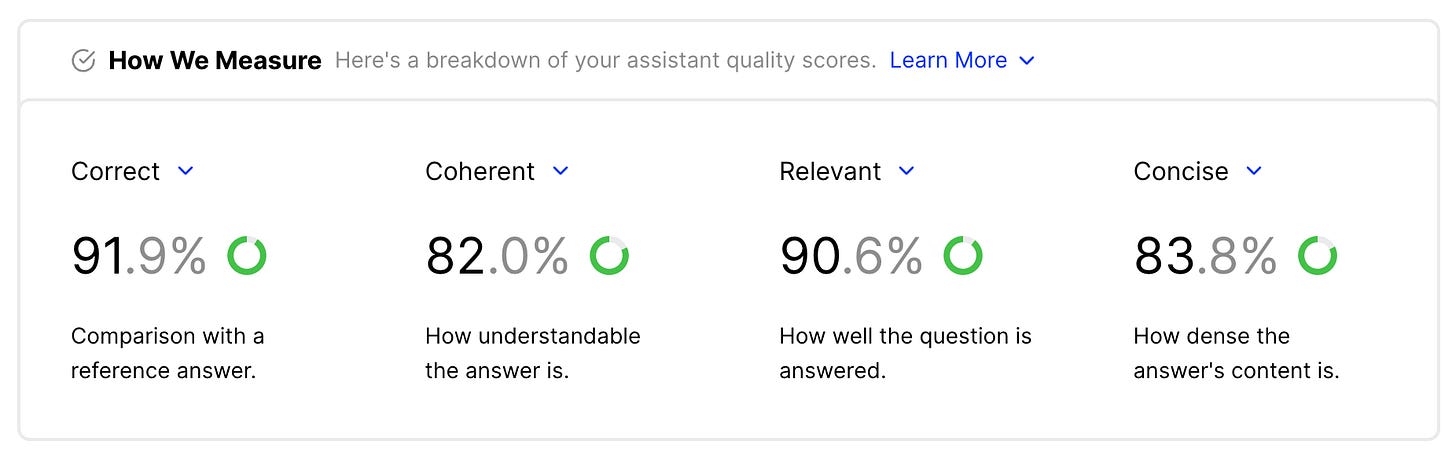

Depending on what you’re building, you might care about everything from tone and style of response to token consumption to latency. For our customers, response quality is generally the most important thing. Based on Joey’s research group’s work on using GPT-4 as a judge and the RAGAS paper, we developed a framework that evaluates our assistant on four criteria: (1) correctness as compared to a reference answer; (2) relevance of the answer; (3) coherence of the concepts; and (4) conciseness.
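To make the judge setup concrete, here is a minimal sketch of how a per-criterion judge prompt might be assembled and the judge’s reply parsed. The rubric wording, the JSON reply format, and the names `CRITERIA`, `build_judge_prompt`, and `parse_judgment` are hypothetical illustrations, not our actual rubric or code, and the call to GPT-4 itself is left out so the sketch runs without an API key. It uses the 1-5 scale from our first attempt, described below.

```python
import json
from dataclasses import dataclass

# Hypothetical one-line rubrics for the four criteria; real rubrics are longer.
CRITERIA = {
    "correctness": "How well does the answer agree with the reference answer?",
    "relevance": "Does the answer address the user's question?",
    "coherence": "Are the concepts presented in a logically connected way?",
    "conciseness": "Is the answer free of unnecessary content?",
}

@dataclass
class Judgment:
    criterion: str
    score: int
    reasoning: str  # the judge's explanation for its score

def build_judge_prompt(criterion: str, question: str, answer: str, reference: str) -> str:
    """Assemble the prompt sent to the judge model for one criterion."""
    return (
        f"You are grading an answer on {criterion}.\n"
        f"Rubric: {CRITERIA[criterion]}\n"
        f"Question: {question}\n"
        f"Reference answer: {reference}\n"
        f"Candidate answer: {answer}\n"
        'Respond with JSON: {"score": <integer 1-5>, "reasoning": "<explanation>"}'
    )

def parse_judgment(criterion: str, raw: str, lo: int = 1, hi: int = 5) -> Judgment:
    """Parse the judge's JSON reply and reject out-of-range scores."""
    data = json.loads(raw)
    score = int(data["score"])
    if not lo <= score <= hi:
        raise ValueError(f"score {score} outside [{lo}, {hi}]")
    return Judgment(criterion, score, str(data["reasoning"]))
```

Asking for the score and the reasoning in one structured reply keeps each explanation attached to the score it justifies, which matters later when digging into low scores.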

For each category, we developed a scoring rubric based on our own experience and the results from the RAGAS paper, and we ask GPT-4 to score our responses in each category according to that rubric. We also ask GPT-4 to explain its reasoning for each score. From these scores, we compute an overall score for each category and an average score for each question in the test set. This gives us an aggregate understanding of the assistant’s performance and lets us drill into areas where it can improve.
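The two roll-ups described above (per category and per question) can be sketched as simple means over the judge scores. This assumes each judgment is a `(question_id, criterion, score)` tuple; the function name `aggregate` and the toy numbers are made up for illustration and are not real results.

```python
from collections import defaultdict
from statistics import fmean

def aggregate(judgments):
    """Roll per-(question, criterion) judge scores up two ways.

    `judgments` is an iterable of (question_id, criterion, score) tuples.
    Returns (per_category, per_question): the mean score for each criterion
    across all questions, and the mean score for each question across all
    criteria.
    """
    by_category = defaultdict(list)
    by_question = defaultdict(list)
    for question_id, criterion, score in judgments:
        by_category[criterion].append(score)
        by_question[question_id].append(score)
    per_category = {c: fmean(s) for c, s in by_category.items()}
    per_question = {q: fmean(s) for q, s in by_question.items()}
    return per_category, per_question

# Toy input: two questions, two criteria each.
results = [
    ("q1", "correctness", 5), ("q1", "conciseness", 3),
    ("q2", "correctness", 4), ("q2", "conciseness", 4),
]
per_category, per_question = aggregate(results)
print(per_category)  # {'correctness': 4.5, 'conciseness': 3.5}
print(per_question)  # {'q1': 4.0, 'q2': 4.0}
```

The per-category view answers “where is the assistant weak overall?”, while the per-question view points at the specific questions worth reading.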

The test set itself is synthetically generated, using the same techniques we use to fine-tune custom LLMs.

How do we improve it?

Our first cut at the scoring rubric was comically harsh: we generated a 1-5 score for each category and found that the model would never give a perfect score, even for the best answers. A few extra words, for example, would drop a score from a 5 to a 3. As a result, we were forced to add more nuance to the rubric and rescale each category accordingly, giving the model room to assign intermediate scores.
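One way to picture “more nuance, rescaled per category” is to give each category a finer-grained raw range and then map every score onto a common 0-100 scale, so a few extra words can cost a point or two instead of two full grades. The ranges in `SCALES` and the function `normalize` are assumptions for illustration; this post does not say which ranges we actually use.

```python
# Hypothetical raw ranges per category; finer ranges allow intermediate scores.
SCALES = {
    "correctness": (0, 20),
    "relevance": (0, 10),
    "coherence": (0, 10),
    "conciseness": (0, 10),
}

def normalize(criterion: str, raw: float) -> float:
    """Linearly map a raw rubric score onto a common 0-100 scale."""
    lo, hi = SCALES[criterion]
    if not lo <= raw <= hi:
        raise ValueError(f"{criterion} score {raw} outside [{lo}, {hi}]")
    return 100 * (raw - lo) / (hi - lo)

print(normalize("correctness", 19))  # 95.0
print(normalize("conciseness", 7))   # 70.0
```

Putting every category on the same 0-100 scale also makes the per-category averages directly comparable.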

This has gotten us to a reasonably good place. Here’s an evaluation we recently generated:

Of course, there’s a lot more for us to do from here.

What’s next?

While reading this, you were probably thinking of ways we could improve this framework. We have a ton of ideas; here are some of our top priorities:

Better calibration. As of today, our evaluation framework has never given us a perfect score on correctness (though it gives us plenty of 95s). We’re biased, of course, but we regularly give precise, correct answers that address user questions clearly. The scoring criteria themselves need further refinement to avoid confusion and negativity bias.

Ensembling results. We’ve found that small changes in the input can lead to wildly lower scores. We’ve even found cases where an answer that’s more concise than our reference answer receives a low conciseness score for reasons we can’t make sense of. Much of this seems to come down to the stochastic behavior of LLMs. Recent results on LLM juries from Cohere are promising: ensembling the results from multiple models reduces variance and cuts down on cases where model randomness produces poorly understood scores.
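A jury can be sketched as average pooling: score the same answer with several different judge models and take the mean. The sketch assumes the per-judge scores have already been collected on a common scale. The judge names, the function `jury_score`, and the use of the standard deviation as a disagreement signal are our own illustrative choices, not details from Cohere’s work or from our framework.

```python
from statistics import fmean, pstdev

def jury_score(scores_by_judge: dict[str, float]) -> tuple[float, float]:
    """Pool one answer's scores from a panel of judge models.

    Returns the mean score (average pooling) and the population standard
    deviation across judges, which can be used to flag disagreement.
    """
    if not scores_by_judge:
        raise ValueError("need at least one judge")
    scores = list(scores_by_judge.values())
    return fmean(scores), pstdev(scores)

# Toy scores from three hypothetical judges. The outlier (40) moves the
# pooled score far less than it would if judge_c were the only judge.
mean_score, spread = jury_score({"judge_a": 90, "judge_b": 85, "judge_c": 40})
print(round(mean_score, 1), round(spread, 1))
```

A large spread is a hint that the score is driven by one judge’s randomness, so such items could be re-scored or sent for human review rather than reported as-is.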

Identifying weak points. As mentioned above, our judging framework generates an explanation for each score it gives, but on a test set of 50 questions, no one wants to read 200 explanations. Using low scores to identify and summarize the assistant’s key weak points will help both us and our customers understand where and how it could perform better.
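With 50 questions and four criteria, that is 50 × 4 = 200 explanations. A first filtering step could keep only the low-scoring judgments and group the worst few by criterion, so a person (or a follow-up LLM summarization call, not shown) reads a handful of explanations instead of all 200. The `ScoredItem` record, the 0-100 scale, the threshold of 70, and the function `weak_points` are hypothetical choices for illustration.

```python
from collections import defaultdict
from typing import NamedTuple

class ScoredItem(NamedTuple):
    question_id: str
    criterion: str
    score: float    # assumed to be on a 0-100 scale
    reasoning: str  # the judge's explanation for this score

def weak_points(items, threshold=70.0, per_criterion=3):
    """Collect the worst-scoring judge explanations, grouped by criterion.

    Keeps only items scoring below `threshold` and returns at most
    `per_criterion` of the lowest-scoring ones for each criterion.
    """
    grouped = defaultdict(list)
    for item in items:
        if item.score < threshold:
            grouped[item.criterion].append(item)
    return {
        criterion: sorted(bucket, key=lambda it: it.score)[:per_criterion]
        for criterion, bucket in grouped.items()
    }
```

For example, `weak_points(items, per_criterion=2)` on a full test set would return at most two explanations per criterion, which is a small enough input to summarize.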

Open source. As we’ve developed our framework, we’ve realized that it’s likely applicable well beyond the scope of our product. Once we’ve made some of the robustness improvements described above, we plan to open-source it this summer, ideally with the community helping to improve its accuracy and add further nuance to our evaluations. As part of this effort, we’re also considering adding a more nuanced set of metrics; we’d love your suggestions!

We’ve been advocating for better evaluations for a few months now, but we don’t think we have all the answers. Every product category will have different concerns and will require different metrics. We know we’re missing plenty for RunLLM too! All that said, we think this is a critical first step, and we’re excited to start sharing how we’re thinking about disciplined evaluations. If you’re curious to learn more or interested in collaborating, please reach out!