Does AI quality matter?

Yes, it does — depending on what you want

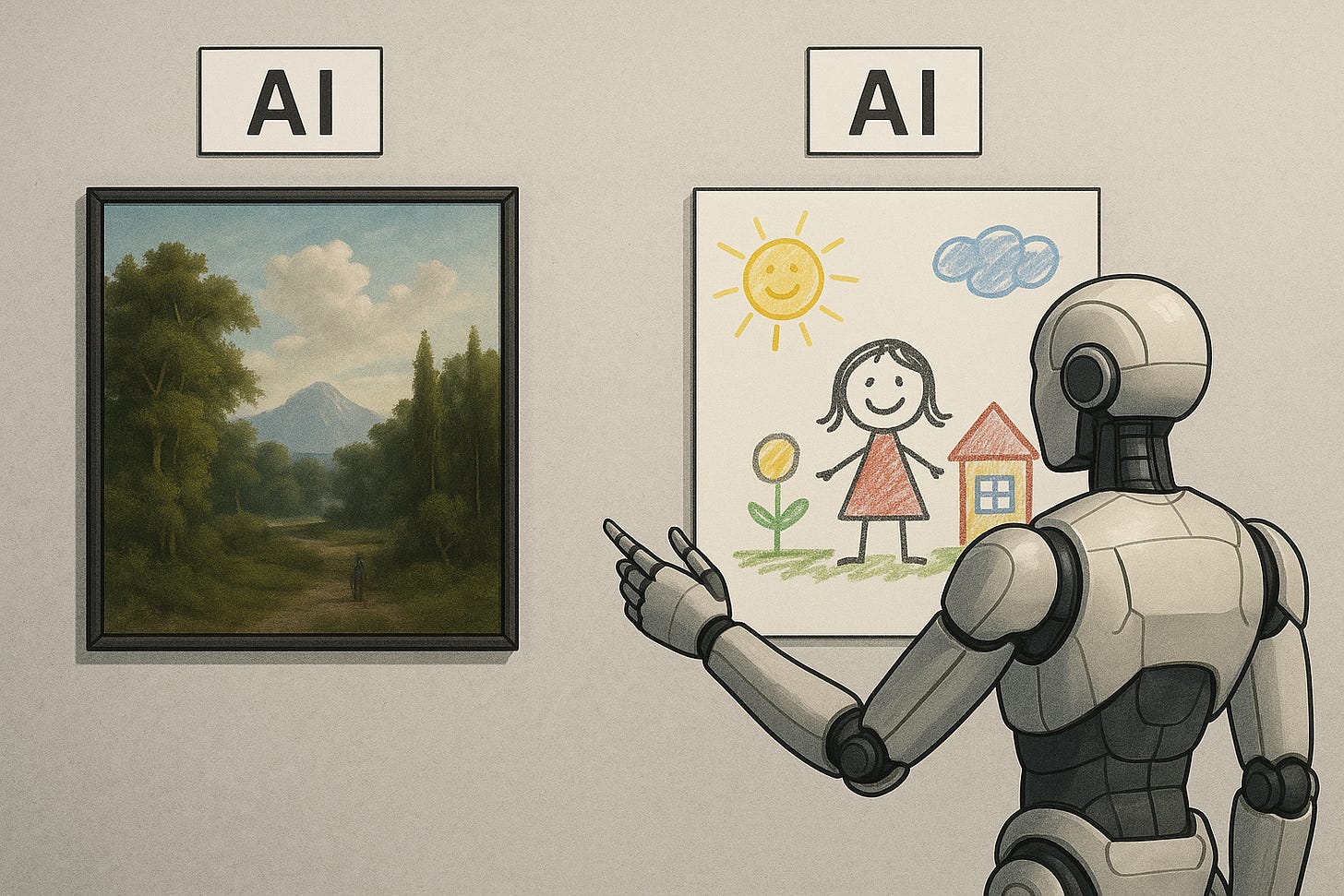

There’s a strange divergence in AI nowadays. On one hand, we see the frontier model labs competing to win math olympiads and solve problems that almost every one of us would have no chance at even understanding. On the other hand, we’re inundated with AI slop. Our inboxes are full of generic-sounding pitches that don’t really explain what each product does. Our LinkedIn feeds are full of posts with completely nonsensical juxtapositions: “This is a copilot. It’s a closer.” And we’ve all seen the amusing X threads about coding agents dropping prod databases.

This has led us to wonder whether people care about the quality of LLM products anymore. Is it enough to have something that generates text and tosses it out into the universe? Or is there value in building a thoughtful application that produces high-quality, trustworthy results? As the founders of a company building a product that prioritizes quality above everything else, we find this to be an existential question: If the frontier model labs are off to solve humanity’s most complex problems, and everyone else is using the cheapest AI product they can find, where does that leave us?

We’re firmly betting that AI quality does still matter. We’re seeing an explosion of apps and products that treat a single API call to GPT-4.1 as the be-all and end-all, but that’s not where the market is going to land.

As we started thinking about this, we wondered whether there was an analogy we could draw to a previous platform shift — web, mobile, cloud, etc. The closest we got was with the explosion of cheap Flash-equivalent games on the iPhone circa 2010, but the key difference was that the existence of those apps didn’t make people think that the iPhone was a crappy platform that they should dismiss. We could torture the analogy further, but we figured we’d make the argument from first principles instead.

Our thesis is that building high-quality AI applications inherently requires more specialization, which means it’s comparatively harder to see value immediately; however, over the next couple of years, these are the products that are going to show the most value.

When it’s hard to show value

Why do we see so much AI slop? It’s not that there aren’t systems that can generate good results. Instead, there are so many AI products on the market that it’s hard to know at first what’s good and what’s not. Just yesterday, a customer told us about a product they finished evaluating that made lofty claims about all the problems it could solve; it proceeded to solve none of them. The trouble is that the difference between high-quality and low-quality products isn’t obvious at first glance.

Interestingly, we’ve found that higher-quality products often become harder to explain. We were super excited about the launch of RunLLM v2 last week: The new features we built were super powerful, but they made the product harder to adopt from a self-serve perspective. For example, the feature we’ve gotten the most positive feedback on is log analysis + debugging — RunLLM can now integrate with tools like Datadog and Grafana, pull the relevant logs for a job that didn’t work, and help you figure out how to fix your issues. The thing with that feature is that it’s not the first thing that a Support Engineer or an SRE is going to set up; first you’ll add your docs site, then you’ll explore additional static data sources, and only later, once you’ve established a baseline level of trust in the product, will you come to the more advanced features.

This points to a universal product principle for us: The more complex a product is, the more hands-on the sales process is going to be. The simple products — add your docs + set up a generic chatbot — are easy to demo but also easy to commodify. The more interesting features require more work to set up and require a bigger investment. These features are the ones that are the most specialized to a particular function within support & SRE.

There’s no inherent good or bad here — it’s just a fundamentally different motion. The commodified products are going to be more generic and will get better as LLMs get better, and they are going to be cheaper products to buy. The more powerful and more specialized products will command a higher premium but will require more hands-on time to set up.

The value vs. price tradeoff

So why are we seeing so much comparatively low-quality AI-generated content out in the world? The short answer is that it’s cheaper than ever to generate it. If you believe that posting on LinkedIn 10x a day will get you a following regardless of what you say, or that blanketing prospects with emails will get you leads, it’s a rational choice to turn up the volume on the content and do it for pennies.

If, on the other hand, you care about the quality of the experience your customers have or the fidelity of a coding agent’s results, you’re naturally going to be willing to pay more for the results. Your expectations will be much higher too: not just better AI responses but also better integration, control, and visibility over agent behavior. You’re not going to get those things for $12 a month, but you will for $100k a year.

Again, there’s space in the market for both of those things, but as a customer, you should be clear about what you’re looking for, and as a builder, you should be clear which niche you’re going to go after.

Quality will win

With all that said, we do think there will be a clear difference between the commodity apps and the high-quality apps in the long run. Our hunch is that the apps that are pitched as “chatbots for X” will likely be a race to the bottom. Core models will get better, and it’s likely that the base search + generate pattern will eventually be Sherlocked by one of the hyperscalers or frontier model labs.

On the other hand, building workflows tailored to different teams and integrated into existing tooling will be significantly harder to replicate (though not impossible). More importantly, this is where real ROI is going to be shown, whether it’s tickets deflected, alerts triaged, or leads generated. By focusing on those key business metrics, this type of product will not only be stickier but will also create a positive feedback loop where understanding business problems better leads to more robust solutions.

Ultimately, quality does matter — if you’re trying to show real ROI. There are cases where checking a box with a minimally viable feature is fine. Not everything needs to be optimized to be perfect quality. Truly powerful products — perhaps as always — aren’t going to be set up with a point-and-click, but that doesn’t mean that the capabilities which can add real value to your workflows don’t already exist.

High-quality AI applications will likely require customization and refinement, but if they can solve your problems, they’re absolutely worth the effort.